A couple of years back we acquired the hosting operations of NetValue Ltd. That included two data center sites: one in Auckland and one in Hamilton. The Auckland center was much bigger and had more equipment and better internet connections, but both had similar network designs.

Early on, we were hit with intermittent network issues at both sites. Sometimes we had to send someone to physically reboot equipment. That wasn’t great!

So last year, we spent a few months on a major upgrade of the Auckland data center. We installed new switches and routers, improved the network design, added more bandwidth providers, and made sure all our racks had dual, redundant power feeds. That made Auckland ready for more growth.

After the Auckland upgrade, we turned our attention to the Hamilton site. The facility itself is excellent, but our network stack there was also old. We weighed whether to spend significant money and time upgrading Hamilton too, or to leverage the work we had already done in Auckland and reduce costs and operational complexity at the same time.

Could we move everything from Hamilton to Auckland without interrupting customers? What were the risks?

What does a hassle-free data center migration look like?

One way to migrate would be to turn everything off in Hamilton, truck it all up to Auckland, and turn it back on. But that would mean hours of downtime and considerable technical risk.

Instead, we wanted to find a way that was the least disruptive to our clients.

Could we do it with zero downtime?

Could we move things piece by piece and roll back if needed?

Could we keep customer IP addresses and DNS settings the same?

Our starting point

Our Hamilton DC had:

- Three racks of equipment (switches, routers, VM hosts, and special hardware)

- Many servers, both physical and virtual

- Several blocks of IPv4 and IPv6 addresses

- Multiple internet connections from different providers

Building a private network between cities

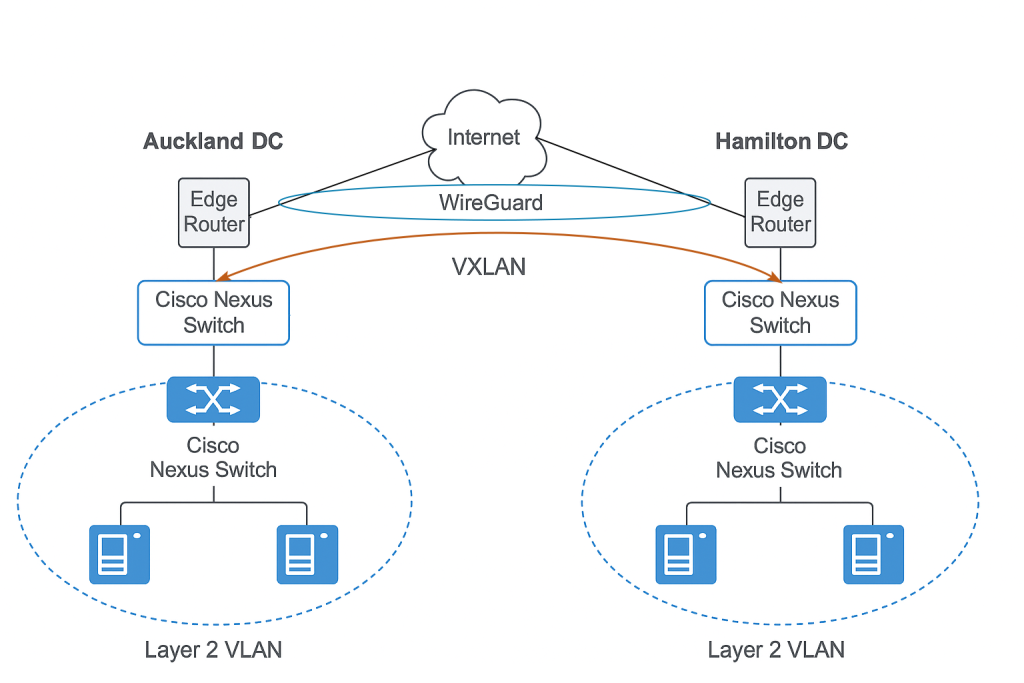

First, we set up a secure network tunnel between Hamilton and Auckland using WireGuard (an encrypted VPN). Then, on top of that, we built a VXLAN overlay so that both data centers appeared to be on the same local network (Layer 2). This meant servers in either location could keep their IP addresses and talk to each other directly, and no one needed to reconfigure their systems.

The beauty of this approach? We could start shifting network routing to Auckland, even while the servers were still physically in Hamilton.

Network traffic could now arrive in Auckland and get sent through the tunnel to Hamilton.
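To make the overlay more concrete: VXLAN (RFC 7348) wraps each Layer 2 Ethernet frame in an 8-byte header carrying a 24-bit VXLAN Network Identifier (VNI) and sends the result as a UDP datagram; 4789 is the IANA-assigned VXLAN port. The sketch below only builds and parses that header. In practice the Linux kernel's VXLAN interfaces do this work, and the VNI used in the example is made up, not one from our network. In a design like ours, the resulting UDP datagrams would then travel to the other site inside the WireGuard tunnel.

```python
from __future__ import annotations

import struct

VXLAN_PORT = 4789                # IANA-assigned UDP port for VXLAN
FLAG_VNI_VALID = 0x08            # the "I" flag: the VNI field is valid
HEADER = struct.Struct("!B3xI")  # flags, 3 reserved bytes, 24-bit VNI + 1 reserved byte


def vxlan_encapsulate(vni: int, frame: bytes) -> bytes:
    """Prepend a VXLAN header to an Ethernet frame; the result is a UDP payload."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # Shift the VNI into the top 24 bits so the final byte stays reserved (zero).
    return HEADER.pack(FLAG_VNI_VALID, vni << 8) + frame


def vxlan_decapsulate(payload: bytes) -> tuple[int, bytes]:
    """Split a VXLAN UDP payload back into (VNI, original Ethernet frame)."""
    flags, vni_field = HEADER.unpack_from(payload)
    if not flags & FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_field >> 8, payload[HEADER.size:]
```

For example, `vxlan_encapsulate(100, frame)[:8].hex()` gives `"0800000000006400"`. The inner frame travels untouched, which is why servers on both sides of the tunnel can behave as if they share one Layer 2 segment.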

Why MTU matters

Internet traffic is broken up into “packets,” and each packet carries some extra data called headers. When tunneling, every layer of encapsulation adds its own headers, which take up space in each packet: WireGuard adds about 60 bytes and VXLAN about 50 bytes (with IPv4 outer headers).

With a standard Internet MTU of 1500 bytes:

| 1500 – 60 (WireGuard) – 50 (VXLAN) ≈ 1390 bytes |
|---|

To avoid fragmentation and the problems it can cause, we set the Maximum Transmission Unit (MTU) on all involved servers to 1370 bytes, leaving a 20-byte safety margin.
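The MTU arithmetic can be reproduced with a short script. This is a sketch using standard protocol header sizes, not values read from our configuration; by default it assumes IPv4 outer headers for both tunnels and untagged inner Ethernet frames (an 802.1Q tag would add 4 more bytes).

```python
"""MTU budget for VXLAN carried inside a WireGuard tunnel (sketch)."""

IPV4_HEADER = 20
IPV6_HEADER = 40
UDP_HEADER = 8
WIREGUARD_DATA_HEADER = 16  # type + reserved (4) + receiver index (4) + counter (8)
WIREGUARD_AUTH_TAG = 16     # Poly1305 authentication tag on each packet
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14  # the bridged frame's own Ethernet header (no 802.1Q tag)


def wireguard_overhead(outer_ip: int = IPV4_HEADER) -> int:
    """Bytes WireGuard adds around each packet it carries."""
    return outer_ip + UDP_HEADER + WIREGUARD_DATA_HEADER + WIREGUARD_AUTH_TAG


def vxlan_overhead(outer_ip: int = IPV4_HEADER) -> int:
    """Bytes VXLAN adds: its outer IP/UDP/VXLAN headers plus the inner Ethernet header."""
    return outer_ip + UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER


def inner_mtu(link_mtu: int = 1500, wg_outer_ip: int = IPV4_HEADER,
              vxlan_outer_ip: int = IPV4_HEADER) -> int:
    """Largest IP packet a server can send without either tunnel fragmenting it."""
    return link_mtu - wireguard_overhead(wg_outer_ip) - vxlan_overhead(vxlan_outer_ip)


if __name__ == "__main__":
    budget = inner_mtu()
    print(f"budget: {budget} bytes, margin at MTU 1370: {budget - 1370} bytes")
```

With the defaults this gives 60 + 50 bytes of overhead and a 1390-byte budget. Passing `wg_outer_ip=IPV6_HEADER` gives exactly 1370, so a 1370-byte setting would still fit if the WireGuard tunnel ran over IPv6, but with no margin left.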

The network migration: step-by-step

With the cross-data-center network design in mind, we carried out the implementation in distinct stages.

Step 1: Bridge the network: We connected VLANs between Hamilton and Auckland using VXLAN, so servers kept their original IPs and didn’t need reconfiguring.

Step 2: Shift the gateway: We provisioned a new default gateway in Auckland. It used the same gateway IP address, just with a different MAC address in a different location.

Step 3: Advertise new routes from Auckland: We started telling the internet to send traffic for our IP ranges to Auckland by announcing them over BGP from the Auckland site. Some providers accepted the new announcements automatically after validating our Route Origin Authorizations (ROAs); for others, we had to call and ask them to accept the new routes.

Step 4: Withdraw old routes: Once traffic via Auckland was stable, we stopped advertising the routes from Hamilton. From then on, inbound traffic entered solely through Auckland.

Step 5: Outbound traffic: At first, servers in Hamilton still sent outgoing traffic through their original Hamilton gateway. When we shut that gateway down, the servers re-ARPed for the gateway IP, picked up the Auckland gateway’s MAC address, and started routing outbound traffic over the VXLAN and out through Auckland.
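Step 3 mentions providers validating ROAs. A ROA is a signed RPKI object stating which AS may originate a prefix, up to a maximum prefix length, and route origin validation (RFC 6811) classifies each BGP announcement against them as valid, invalid, or not found. Below is a minimal sketch of that classification using Python's `ipaddress` module. The prefixes and AS numbers in the example are documentation-reserved values, not ours, and real routers work from validated ROA payloads supplied by an RPKI validator rather than a Python list.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass
from typing import Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass(frozen=True)
class Roa:
    prefix: Network   # prefix the ROA covers
    max_length: int   # longest announced prefix length it authorizes
    origin_as: int    # AS allowed to originate it (AS0 authorizes nobody)


def validate_origin(route: Network, origin_as: int, roas: list[Roa]) -> str:
    """Classify an announcement as 'valid', 'invalid' or 'not-found' (RFC 6811)."""
    covered = False
    for roa in roas:
        if roa.prefix.version != route.version or not route.subnet_of(roa.prefix):
            continue  # this ROA says nothing about the route
        covered = True
        if (route.prefixlen <= roa.max_length
                and roa.origin_as != 0
                and roa.origin_as == origin_as):
            return "valid"
    # Covered by at least one ROA but matched by none: networks enforcing ROV drop these.
    return "invalid" if covered else "not-found"
```

With a single ROA for `203.0.113.0/24`, max length 24, AS 64500: announcing `203.0.113.0/24` from AS 64500 is valid, a more-specific `/25` or a different origin AS is invalid, and `198.51.100.0/24` is not found. Because a ROA names an origin AS rather than a location, moving an announcement between sites under the same origin AS should validate exactly as before.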

Moving the hardware

With network traffic handled, we could physically move equipment.

First, we confirmed we had enough rack space and power in Auckland. We did: our upgrades there had paid off.

A key piece of our ‘zero downtime’ strategy was being able to physically move servers without taking services offline.

That was possible because we run our services on VMs, and each of those VMs is in a fault-tolerant cluster.

Our clusters use an active/passive setup: one live VM and one standby VM.

To move a VM from Hamilton to Auckland, we ‘disconnect’ the passive VM in Hamilton, stand up a new passive VM in Auckland, and then live migrate the active VM to Auckland.

Live migration is a process where a VM moves from one physical host to another without interruption (no reboot, no network connections lost). Live migrations work by transmitting a VM’s running state (e.g. all its memory) to the new server.
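A common way to transmit that running state, and QEMU/KVM's default, is iterative pre-copy. Memory is copied while the VM keeps running, then the pages the guest dirtied during that pass are re-sent, and so on until the remainder is small enough to copy during a brief pause. The toy model below assumes a constant dirty ratio (pages dirtied per page sent), which is a simplification; real hypervisors measure dirty rates and bandwidth, and this post doesn't depend on any particular hypervisor's settings.

```python
from __future__ import annotations


def precopy_migration(total_pages: int, dirty_ratio: float,
                      stop_threshold: int, max_rounds: int = 30) -> tuple[list[int], int]:
    """Toy model of iterative pre-copy live migration.

    dirty_ratio is an assumed constant: pages the running guest dirties for
    every page sent. Returns (pages sent in each live round, pages left for
    the final stop-and-copy while the VM is paused).
    """
    rounds: list[int] = []
    to_send = total_pages  # the first round copies all of memory
    while True:
        rounds.append(to_send)  # copy these pages while the VM keeps running
        to_send = int(to_send * dirty_ratio)  # pages dirtied during that copy
        if to_send <= stop_threshold or len(rounds) >= max_rounds:
            break
    # In a real migration the VM pauses here; the remaining pages plus CPU and
    # device state are sent, and the VM resumes on the destination host.
    return rounds, to_send
```

For example, `precopy_migration(1000, 0.25, 20)` returns `([1000, 250, 62], 15)`: three live passes, then only 15 pages copied while the VM is paused. If the guest dirties memory as fast as it can be sent (a ratio of 1.0), the rounds never shrink, which is why real implementations cap the number of rounds or throttle the guest.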

Live migration also requires that the VM has access to the same disk contents in both locations. In our case, we use DRBD (Distributed Replicated Block Device), open-source software that replicates block devices between hosts, to keep disk images in sync.
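The ‘disconnect, then stand up a new passive VM’ sequence relies on replicated storage. The toy model below illustrates the idea behind synchronous block replication with an out-of-sync bitmap, the mechanism DRBD uses to resync a reconnecting peer without copying the whole disk. It is a simplified, hypothetical sketch: the class and method names are invented for illustration, and it is not DRBD's implementation, API, or configuration.

```python
from __future__ import annotations


class ReplicatedDisk:
    """Toy model of synchronous block replication (hypothetical, not DRBD's API)."""

    def __init__(self, num_blocks: int) -> None:
        self.primary: list[bytes] = [b""] * num_blocks
        self.peer: list[bytes] | None = [b""] * num_blocks  # start connected
        self.out_of_sync: set[int] = set()  # blocks the peer is missing

    def write(self, block: int, data: bytes) -> None:
        self.primary[block] = data
        if self.peer is not None:
            # Synchronous: the write completes only once the peer has it too.
            self.peer[block] = data
        else:
            # Disconnected: remember the block so a later resync copies only it.
            self.out_of_sync.add(block)

    def disconnect_peer(self) -> list[bytes]:
        """Detach the current peer and hand back its (now frozen) replica."""
        if self.peer is None:
            raise RuntimeError("no peer attached")
        old, self.peer = self.peer, None
        return old

    def attach_peer(self, replica: list[bytes] | None = None) -> int:
        """Attach a peer and return how many blocks had to be copied to it.

        Pass the replica returned by disconnect_peer() to reconnect that same
        peer (a bitmap resync is only valid against that copy); pass nothing
        to attach a brand-new, empty peer.
        """
        if replica is None:
            replica = [b""] * len(self.primary)
            to_copy = set(range(len(self.primary)))  # full initial sync
        else:
            to_copy = self.out_of_sync  # bitmap resync: only the missing blocks
        for block in to_copy:
            replica[block] = self.primary[block]
        self.peer = replica
        self.out_of_sync = set()
        return len(to_copy)
```

Standing up a brand-new standby, as in Auckland, means a full initial sync (`attach_peer()` copies every block), while reconnecting the same peer after a short outage copies only the blocks written while it was away.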

Because of this setup, we could move things at our own pace and avoid outages.

Corner cases: Colocated hardware

While most of our infrastructure could be migrated live with zero impact, a couple of customer-owned, colocated devices had to be shut down to be moved. These couldn’t take part in the clustering and live migration strategy we used for our VMs, so we scheduled downtime with the affected customers.

In these cases, the key to success is customer communication. We notified these customers well in advance and gave them options to schedule the move to suit their business needs.

During the move, we provided regular updates so they knew when to expect full service restoration. To minimize downtime, a team member completed the cabling work ahead of the hardware move, so everything was ready before the rest of the migration crew arrived on site.

Lessons we learned

Get the order right: Don’t try to do everything at once. In our case, we completed the network changes before starting any physical server moves.

Take baby steps: Split the work into smaller chunks and tackle one chunk at a time.

Plan for failure: Unsurprisingly, things don’t always work as expected the first time. For each step in the plan we had clear testing and roll-back procedures.

For example, for the network changes we started with a proof of concept that showed the tunnel worked, that we could route traffic where it needed to go, and that the setup scaled to our production loads.

Limit risk: Since things get easier the more you do them, we migrated non-customer-facing services first. When migrating IP ranges, for example, we started with unused IP blocks.

Prove the concepts: Running VXLAN over WireGuard for a cross-data-center production migration isn’t common, so once it was set up we tested it with various workloads to make sure it worked seamlessly for us and kept traffic secure end-to-end.

Communicate: Even when a change is expected to have no impact, it is good to tell customers about it in advance.

Was it easy: Not really! This took months of planning and skilled work.

What we achieved

One of the biggest successes was what didn’t happen:

- No downtime

- No customers had to change IP addresses

- No DNS records or firewalls needed updating

As of last week, all NetValue hosting services run from Auckland. The last servers have been removed from Hamilton, and the migration is complete.

About RimuHosting

RimuHosting has been helping businesses with hosting since 2002—virtual machines, email, storage, DNS, websites, and domain registration.

We’ve spent 20+ years working in data centers around the world. Our latest venture, Infrassembly, helps our clients manage their digital infrastructure inside data centers. We help customers move expensive workloads out of the cloud and into data centers where they have full control.